Facebook Has a Superuser-Supremacy Problem

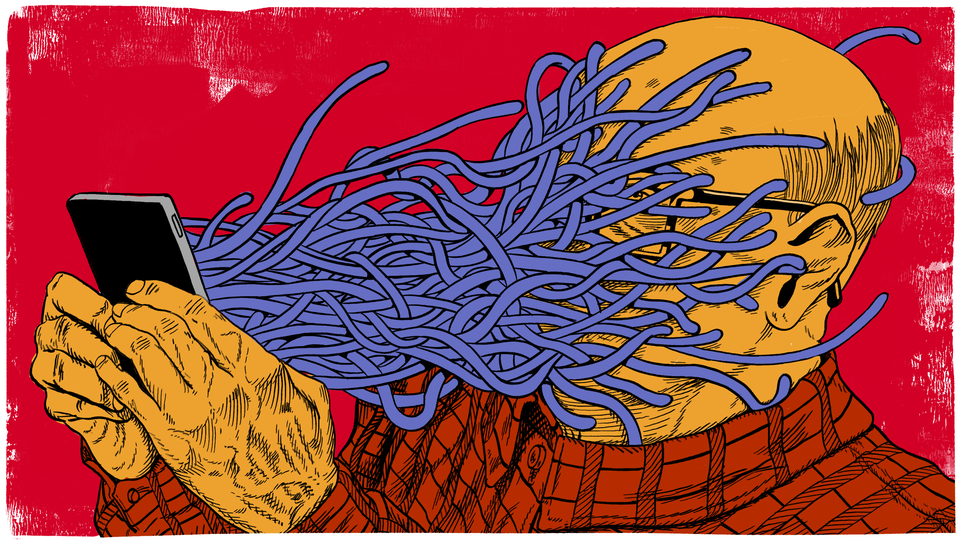

Most public activity on the platform comes from a tiny, hyperactive group of abusive users. Facebook relies on them to decide what everyone sees.

If you want to understand why Facebook too often is a cesspool of hate and disinformation, a good place to start is with users such as John, Michelle, and Calvin.

John, a caps-lock devotee from upstate New York, calls House Speaker Nancy Pelosi “PIGLOSI,” uses the term negro, and says that the right response to Democrats with whom they disagree is to “SHOOT all of them.” Michelle rails against the “plandemic.” Calvin uses gay as a slur and declares that Black neighborhoods are always “SHITHOLES.” You’ve almost certainly encountered people like these on the internet. What you may not realize, though, is just how powerful they are.

For more than a year, we’ve been analyzing a massive new data set that we designed to study public behavior on the 500 U.S. Facebook pages that get the most engagement from users. Our research, part of which will be submitted for peer review later this year, aims to better understand the people who spread hate and misinformation on Facebook. We hoped to learn how they use the platform and, crucially, how Facebook responds. Based on prior reporting, we expected it would be ugly. What we found was much worse.

The most alarming aspect of our findings is that people like John, Michelle, and Calvin aren’t merely fringe trolls, or a distraction from what really matters on the platform. They are part of an elite, previously unreported class of users who produce more likes, shares, reactions, comments, and posts than 99 percent of Facebook users in America.

They’re superusers. And because Facebook’s algorithm rewards engagement, these superusers have enormous influence over which posts are seen first in other users’ feeds, and which are never seen at all. Even more shocking is just how nasty most of these hyper-influential users are. The most abusive people on Facebook, it turns out, are given the most power to shape what Facebook is.

Facebook activity is far more concentrated than most realize. The company likes to emphasize the breadth of its platform: nearly 2.9 billion monthly active users, visiting millions of public pages and groups. This is misleading. Our analysis shows that public activity is focused on a far narrower set of pages and groups, frequented by a much thinner slice of users.

Top pages such as those of Ben Shapiro, Fox News, and Occupy Democrats generated tens of millions of interactions a month in our data, while every U.S. page ranked 300th or lower in engagement received fewer than 1 million interactions. (The pages with the most engagement included examples from the far right and the far left, but right-wing pages were dominant among the top-ranked overtly political pages.) This winners-take-all pattern mirrors that seen in many other arenas, such as the dominance of a few best-selling books or the way a few dozen huge blue-chip firms dominate the total market capitalization of the S&P 500. On Facebook, though, the concentration of attention on a few ultra-popular pages has not been widely known.

We analyzed two months of public activity from the 500 U.S.-run pages with the highest average engagement in the summer of 2020. The top pages lean toward politics, but the list includes pages on a broad mix of other subjects too: animals, daily motivation, Christian devotional content, cooking and crafts, and of course news, sports, and entertainment. User engagement fell off so steeply across the top 500, and in such a statistically regular fashion, that we estimate these 500 pages accounted for about half of the public U.S. page engagement on the platform. (We conducted our research with a grant from the nonprofit Hopewell Fund, which is part of a network of organizations that distribute anonymous donations to progressive causes. Officials at Hopewell were not involved in any way in our research, or in assessing or approving our conclusions. The grant we received is not attached to any political cause but is instead part of a program focused on supporting researchers studying misinformation and accountability on the social web.)
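The article does not spell out how the “about half” figure was extrapolated from the top 500 pages. One common approach, assumed here purely for illustration and not necessarily the authors’ method, is to fit a Zipf-style power law to engagement versus rank and extend it to the pages that were not observed. The function names and the `total_pages` parameter are hypothetical.

```python
import math


def fit_power_law(engagements):
    """Least-squares fit of log(engagement) = a - s * log(rank).

    `engagements` must be positive and sorted from highest to lowest,
    so that the first value belongs to rank 1. Returns (a, s).
    """
    xs = [math.log(rank) for rank in range(1, len(engagements) + 1)]
    ys = [math.log(e) for e in engagements]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return mean_y - slope * mean_x, -slope


def estimated_top_share(engagements, total_pages):
    """Share of all page engagement captured by the observed top pages.

    Ranks beyond the observed ones, up to `total_pages` (an assumed count
    of pages in the population), are filled in from the fitted power law.
    """
    a, s = fit_power_law(engagements)
    observed = sum(engagements)
    tail = sum(
        math.exp(a) * rank ** (-s)
        for rank in range(len(engagements) + 1, total_pages + 1)
    )
    return observed / (observed + tail)


# Synthetic data only: a perfect power law with exponent 1.2.
top_500 = [1e7 * rank ** -1.2 for rank in range(1, 501)]
print(round(estimated_top_share(top_500, total_pages=100_000), 2))
```

The result depends heavily on the fitted exponent and the assumed page count: when `s` is at or below 1, the tail sum keeps growing as `total_pages` increases, so the estimated share of the top pages shrinks toward zero.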

Public groups differ from pages in several ways. Pages usually represent organizations or public figures, and only administrators are able to post content on them, while groups are like old-school internet forums where any user can post. Groups thus tend to have a much higher volume of posts, more comments, and fewer likes and shares—but they also follow a winners-take-all pattern, albeit a less extreme one.

Because the number of group posts is so much larger, we analyzed groups more intensely over two weeks within that same two-month time frame in 2020, looking at tens of millions of the highest-interaction posts from more than 41,000 of the highest-membership U.S. public groups.

Overall, we observed 52 million users active on these U.S. pages and public groups, less than a quarter of Facebook’s claimed user base in the country. Among this publicly active minority of users, the top 1 percent of accounts were responsible for 35 percent of all observed interactions; the top 3 percent were responsible for 52 percent. Many users, it seems, rarely, if ever, interact with public groups or pages.
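The concentration statistic in this paragraph can be sketched in a few lines: sort accounts by their interaction totals and take the share held by the most active slice. The function name and the toy counts below are hypothetical and are not drawn from the study’s data.

```python
def top_fraction_share(interactions_per_user, fraction):
    """Share of all interactions produced by the most active `fraction` of users.

    `interactions_per_user` holds one total per account; at least one
    account is always counted as "top".
    """
    ranked = sorted(interactions_per_user, reverse=True)
    k = max(1, round(len(ranked) * fraction))
    return sum(ranked[:k]) / sum(ranked)


# Toy data: three heavy accounts and 97 accounts with one interaction each.
counts = [500, 300, 100] + [1] * 97
print(top_fraction_share(counts, 0.01))  # 500 / 997, about 0.50
print(top_fraction_share(counts, 0.03))  # 900 / 997, about 0.90
```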

As skewed as those numbers are, they still underestimate the dominance of superusers. Facebook users follow a consistent ladder of engagement. Low-public-activity users overwhelmingly do just one thing: They like a post or two on one of the most popular pages. As activity increases, users perform more types of public engagement—adding shares, reactions, and then comments—and spread out beyond the most popular pages and groups. As we look at smaller and smaller pages and groups, then, we find that more and more of their engagement comes from the most avid users. Complete coverage of the smaller pages and tiny groups that our data set misses would therefore paint an even starker picture of superuser supremacy.

The dominance of superusers has huge implications beyond just our initial concern with abusive users. Perhaps the most important revelations that came from the former Facebook data engineer Frances Haugen’s trove of internal documents concerned the inner workings of Facebook’s key algorithm, called “Meaningful Social Interaction,” or MSI. Facebook introduced MSI in 2018, as it was confronting declining engagement across its platform, and Mark Zuckerberg hailed the change as a way to help “connect with people we care about.” Facebook reportedly tied employees’ bonuses to the measure.

The basics of MSI are simple: It ranks posts by assigning points for different public interactions. Posts with a lot of MSI tend to end up at the top of users’ news feeds—and posts with little are, usually, never seen at all. According to The Wall Street Journal, when MSI was first rolled out on the platform, a “like” was worth one point; reactions and re-shares were worth five points; “nonsignificant” comments were worth 15 points; and “significant” comments or messages were worth 30.
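To make the point arithmetic concrete, here is a minimal sketch that scores posts with the weights reported by the Journal and sorts them. It is only an illustration under that assumption: Facebook’s production ranking also predicts how likely each viewer is to engage, and the function names and dictionary keys here are hypothetical.

```python
# Point values reported by The Wall Street Journal for MSI's initial rollout.
# The dictionary keys are hypothetical labels chosen for this sketch.
MSI_WEIGHTS_2018 = {
    "like": 1,
    "reaction": 5,
    "reshare": 5,
    "nonsignificant_comment": 15,
    "significant_comment": 30,
}


def msi_score(counts, weights=MSI_WEIGHTS_2018):
    """Weighted sum of a post's interactions; an unknown type raises KeyError."""
    return sum(weights[kind] * n for kind, n in counts.items())


def rank_posts(posts, weights=MSI_WEIGHTS_2018):
    """Post IDs ordered from highest to lowest MSI-style score."""
    return sorted(posts, key=lambda pid: msi_score(posts[pid], weights), reverse=True)


posts = {
    "A": {"like": 100},                             # 100 points
    "B": {"significant_comment": 2, "reshare": 1},  # 65 points
    "C": {"nonsignificant_comment": 5},             # 75 points
}
print(rank_posts(posts))  # ['A', 'C', 'B']
```

Under these weights, five short comments earn three-quarters of the points of a hundred likes, which helps explain why cheap, repeated comments can carry so much weight.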

A metric like MSI, which gives more weight to less frequent behaviors such as comments, confers influence on an even smaller set of users. Using the values reported by The Wall Street Journal and drawing on Haugen’s documents, we estimate that the top 1 percent of publicly visible users would have produced about 45 percent of MSI on the pages and groups we observed, give or take a couple of percentage points depending on what counts as a “significant” comment. According to internal messages characterizing his reasoning, Zuckerberg initially killed changes to the News Feed proposed by Facebook’s integrity team because he was worried they would lower MSI. Because activity is so concentrated, though, this effectively let hyperactive users veto the very policies that would have reined in their own abuse.

Our data suggests that a majority of MSI on top U.S. pages came from about 700,000 users out of the more than 230 million users that Facebook claims to have in America. Facebook declined to answer our questions for this article, and instead provided this statement: “While we’re not able to comment on research we haven’t seen, the small parts that have been shared with us are inaccurate and seem to fundamentally misunderstand how News Feed works. Ranking is optimized for what we predict each person wants to see, not what the most active users do.”

Facebook’s comments aside, it is well documented that the company has long used friends’ and general users’ activity as the key predictor of “what users want to see,” and that MSI in particular has been Zuckerberg’s “north star.” Multiple reports show how Facebook has repeatedly tweaked the weights of different MSI components, such as reaction emoji. Initially five points, they were dropped to four, then one and a half, then likes and loves were boosted to two while the angry emoji’s weight was dropped to zero. As The Atlantic first reported last year, internal documents show Facebook engineers reporting that reducing the weight of “angry” translated into a substantial reduction in hate speech and misinformation. Facebook says it made that change permanent in the fall of 2020. An internal email from January 2020 says that Facebook was rolling out a change that would reduce the influence strangers had on the MSI metric.

Our research shows something different: None of this tweaking changes the big picture. The users who produce the most public reactions also produce the most likes, shares, and comments—so re-weighting merely reshuffles which of the most active users matter most. Now that we can see that harmful behaviors come mostly from superusers, the conclusion is clear: So long as adding up different types of engagement remains a key ingredient in Facebook’s recommendation system, it will keep amplifying the choices of the same ultra-narrow, largely hateful slice of users.
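The reshuffling argument rests on a simple property of weighted sums: if one account has at least as many interactions as another in every category, it scores at least as high under any set of nonnegative weights. The toy sketch below uses made-up accounts and two hypothetical weight schemes (the second cuts reactions to 1.5 points, loosely echoing one of the reported changes). It illustrates the mechanism only and is not a test of the study’s findings.

```python
# Two hypothetical weight schemes; B lowers reactions from 5 to 1.5 points.
WEIGHTS_A = {"like": 1, "reaction": 5, "reshare": 5, "comment": 15}
WEIGHTS_B = {"like": 1, "reaction": 1.5, "reshare": 5, "comment": 15}

# Made-up per-user interaction counts, not data from the study.
USERS = {
    "super1": {"like": 400, "reaction": 300, "reshare": 80, "comment": 200},
    "super2": {"like": 350, "reaction": 120, "reshare": 60, "comment": 250},
    "casual1": {"like": 20, "reaction": 5, "reshare": 1, "comment": 2},
    "casual2": {"like": 3},
    "casual3": {"like": 10, "reaction": 8, "comment": 1},
}


def user_score(counts, weights):
    """Weighted engagement total; missing interaction types count as zero."""
    return sum(w * counts.get(kind, 0) for kind, w in weights.items())


def rank_users(users, weights):
    """User names ordered from highest to lowest weighted score."""
    return sorted(users, key=lambda u: user_score(users[u], weights), reverse=True)


def top_k_share(users, weights, k):
    """Fraction of all weighted engagement produced by the k highest scorers."""
    scores = sorted((user_score(c, weights) for c in users.values()), reverse=True)
    return sum(scores[:k]) / sum(scores)


for name, weights in [("A", WEIGHTS_A), ("B", WEIGHTS_B)]:
    print(name, rank_users(USERS, weights)[:2], round(top_k_share(USERS, weights, 2), 3))
# A ['super1', 'super2'] 0.986
# B ['super2', 'super1'] 0.989
```

Cutting the reaction weight flips the order of the two heaviest accounts, but the same two accounts stay on top and their combined share barely moves.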

So who are these people? To answer that question, we looked at a random sample of 30,000 users, out of the more than 52 million users we observed participating on these pages and public groups. We focused on the most active 300 by total interactions, those in the top percentile in their total likes, shares, reactions, comments, and group posts. Our review of these accounts, based on their public profile information and pictures, shows that these top users skew white, older, and—especially among abusive users—male. Under-30 users are largely absent.

Because the top 300 were all heavy users, three-quarters of them left at least 20 public comments over our two-month period, and some left thousands. We read as many of their comments as we could, more than 80,000 total.

Of the 219 accounts with at least 25 public comments, 68 percent spread misinformation, reposted in spammy ways, published comments that were racist, sexist, anti-Semitic, or anti-gay, wished violence on their perceived enemies, or, in most cases, did several of the above. Even allowing for a margin of error of about 6 percentage points, it is clear that a supermajority of the most active users are toxic.
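The article does not say how that margin of error was computed. Assuming the standard normal approximation for a sample proportion at 95 percent confidence ($z \approx 1.96$), the figure follows from the 68 percent rate among 219 accounts:

$$
\text{MOE} = z\sqrt{\frac{\hat{p}(1-\hat{p})}{n}} = 1.96\sqrt{\frac{0.68 \times 0.32}{219}} \approx 1.96 \times 0.0315 \approx 0.062
$$

Under that assumption, the plausible range runs from roughly 62 to 74 percent, still well above half.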

Top users pushed a dizzying and contradictory flood of misinformation. Many asserted that COVID does not exist, that the pandemic is a form of planned mass murder, or that it is an elaborate plot to microchip the population through “the killer vaccination program” of Bill Gates. Over and over, these users declared that vaccines kill, that masks make you sick, and that hydroxychloroquine and zinc fix everything. The misinformation we encountered wasn’t all about COVID-19—lies about mass voter fraud appeared in more than 1,000 comments.

Racist, sexist, anti-Semitic, and anti-immigrant comments appeared constantly. Female Democratic politicians—Black ones especially—were repeatedly addressed as “bitch” and worse. Name-calling and dehumanizing language about political figures was pervasive, as were QAnon-style beliefs that the world is run by a secret cabal of international child sex traffickers.

In addition to the torrent of vile posts, dozens of top users behaved in spammy ways. We don’t see large-scale evidence of bot or nonhuman accounts in our data, and comments have traditionally been the hardest activity to fake at scale. But we do see many accounts that copy and paste identical rants across many posts on different pages. Other accounts posted repeated links to the same misinformation videos or fake news sites. Many accounts also repeated one- or two-word comments—often as simple as “yes” or “YES !!”—dozens and dozens of times, an unusual behavior for most users. Whether this behavior was coordinated or not, these throwaway comments gave a huge boost to MSI, and signaled to Facebook’s algorithm that this is what users want to see.

In many cases, this toxic mix of misinformation and hate culminated in fantasies about political violence. Many wanted to shoot, run over, hang, burn, or blow up Black Lives Matter protesters, “illegals,” or Democratic members of Congress. They typically justified this violence with racist falsehoods or imaginary claims about antifa. Many top users boasted that they were ready for the seemingly inevitable violence, that they were buying guns, that they were “locked and loaded.”

These disturbing comments were not just empty talk: Many of those indicted for participating in the January 6 attack on the U.S. Capitol also appear in our data. We were able to connect the first 380 individuals charged to 210 Facebook accounts; 123 of these were publicly active during the time our data set was constructed, and 51 of them together left more than 1,200 comments. The abusive language in those comments mirrors that of the top 1 percent of users—further illustrating the risk of pretending that harmful users are just a few bad apples among a more civil general user base.

Recommendation algorithms change over time, and Facebook is notoriously secretive about its inner workings. Our research captures an important but still-limited snapshot of the platform. But so long as user engagement remains the most important ingredient in how Facebook recommends content, it will continue to give its worst users the most influence. And if things are this bad in the United States, where Facebook’s moderation efforts are most active, they are likely much worse everywhere else.

Allowing a small set of people who behave horribly to dominate the platform is Facebook’s choice, not an inevitability. If each of Facebook’s 15,000 U.S. moderators aggressively reviewed several dozen of the most active users and permanently removed those guilty of repeated violations, abuse on Facebook would drop drastically within days. But so would overall user engagement.

Perhaps this is why we found that Facebook rarely takes action, even against the worst offenders. Of the 150 accounts with clear abusive behavior in our sample, only seven had been suspended a year later. Facebook may publicly condemn users who post hate, spread misinformation, and hunger for violence. In private, though, hundreds of thousands of repeat offenders still rank among the most important people on Facebook.